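This post is a follow-up to the previous one, [筆記] Python 爬蟲 PTT 八卦版 ([Notes] Scraping the PTT Gossiping board with Python). It adds how to crawl articles on the Beauty board and automatically download their images. This is a simple crawler, so it may not handle every exceptional case; thanks for your understanding.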

Course name

Python 網頁爬蟲入門實戰 (Python Web Crawling: A Hands-On Introduction): https://bit.ly/2U6wElg

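For beginners in web crawling, this is a fairly good companion resource. If needed, you can pair it with the book 「Python:網路爬蟲與資料分析入門實戰」 (Python: A Hands-On Introduction to Web Crawling and Data Analysis).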

Related course articles

Code

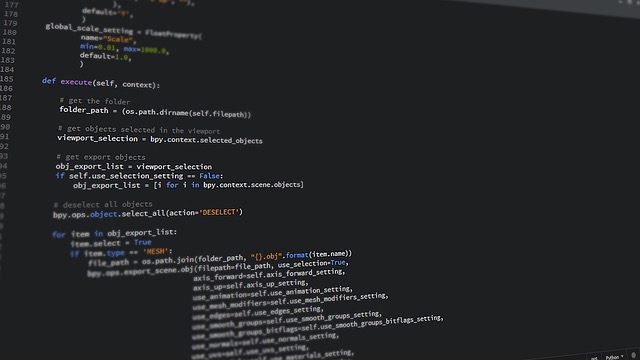

    import datetime
    import os
    import re
    import urllib.request

    import requests
    from bs4 import BeautifulSoup

    PTT_url = "https://www.ptt.cc"


    def get_webPage(url):
        # The over18 cookie skips PTT's age-confirmation page
        res = requests.get(url, cookies={'over18': '1'})
        if res.status_code != 200:
            print("Invalid URL", res.url)
            return None
        return res.text


    def get_articles(page, date):
        soup = BeautifulSoup(page, 'html5lib')

        # Link to the previous (older) index page
        prevURL = soup.select('.btn-group-paging a')[1]['href']

        # Collect the articles posted on the given date
        articles = []
        divs = soup.select('.r-ent')
        for article in divs:
            if article.find('div', 'date').text.strip() == date:
                # Push count
                pushCount = 0
                pushString = article.find('div', 'nrec').text
                if pushString:
                    try:
                        pushCount = int(pushString)  # convert the string to a number
                    except ValueError:
                        if pushString == "爆":
                            pushCount = 99
                        elif pushString.startswith('X'):
                            pushCount = -10

                # Only entries that still have a link are kept
                if article.find('a'):
                    title = article.find('a').text  # article title
                    href = article.find('a')['href']  # article link
                    author = article.find('div', 'author').text  # author name
                    articles.append({
                        'title': title,
                        'href': href,
                        'pushCount': pushCount,
                        'author': author
                    })
        return articles, prevURL


    if __name__ == "__main__":
        allArticles = []

        # Fetch the latest index page of the Beauty board
        currentPage = get_webPage(PTT_url + '/bbs/Beauty/index.html')

        # Today's date on the local machine
        todayRoot = datetime.date.today()
        # Convert to PTT's date format and drop the leading '0' of the month
        today = todayRoot.strftime("%m/%d").lstrip('0')

        articles, prevURL = [], None
        if currentPage:
            articles, prevURL = get_articles(currentPage, today)

        # While a page has articles from today, keep checking the previous page
        while articles:
            allArticles += articles
            currentPage = get_webPage(PTT_url + prevURL)
            if currentPage is None:
                break
            articles, prevURL = get_articles(currentPage, today)

        # Summary of the collected articles
        print('Found', len(allArticles), 'articles today')
        threshold = 10  # push-count threshold for a popular article
        print('Popular articles (> %d pushes):' % (threshold))

        for article in allArticles:
            if article['pushCount'] > threshold:
                print(article['title'], PTT_url + article['href'])

                # Open the article itself and look for images
                url = PTT_url + article['href']
                newRequest = get_webPage(url)
                if newRequest is None:
                    continue
                soup = BeautifulSoup(newRequest, 'html5lib')

                # Find links that point to imgur .jpg images
                imgLinks = soup.find_all('a', {'href': re.compile(r'https://(imgur|i\.imgur)\.com/.*\.jpg$')})

                # Create a folder named after the article title,
                # replacing characters that are not allowed in folder names
                folderName = re.sub(r'[\\/:*?"<>|]', '_', article['title'].strip())
                os.makedirs(folderName, exist_ok=True)

                if len(imgLinks) > 0:
                    try:
                        for imgLink in imgLinks:
                            print(imgLink['href'])
                            fileName = imgLink['href'].split("/")[-1]
                            urllib.request.urlretrieve(imgLink['href'], os.path.join(folderName, fileName))
                    except Exception as e:
                        print(e)
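The two parsing rules in the script, converting the push-count text and picking out imgur `.jpg` links, can be tried offline without sending any request to PTT. The sketch below is only an illustration: the helper names `parse_push_count`, `filter_image_links`, and `file_name_from_url` are hypothetical and do not appear in the original script, and the sample links are made up.

```python
import re

# Same idea as the pattern in the script: imgur links that end in .jpg
IMG_PATTERN = re.compile(r'https://(imgur|i\.imgur)\.com/.*\.jpg$')


def parse_push_count(push_string):
    """Convert the text of an 'nrec' cell to an integer (hypothetical helper)."""
    push_string = push_string.strip()
    if not push_string:
        return 0  # no pushes shown
    try:
        return int(push_string)
    except ValueError:
        if push_string == '爆':
            return 99  # the script treats "爆" as 99
        if push_string.startswith('X'):
            return -10  # the script treats any "X..." value as -10
        return 0


def filter_image_links(hrefs):
    """Keep only the hrefs that match the imgur .jpg pattern (hypothetical helper)."""
    return [href for href in hrefs if IMG_PATTERN.search(href)]


def file_name_from_url(url):
    """Use the last path segment as the local file name, as the script does."""
    return url.split('/')[-1]


if __name__ == '__main__':
    # Made-up sample links, for illustration only
    sample_hrefs = [
        'https://i.imgur.com/abc123.jpg',
        'https://imgur.com/def456',
        'https://example.com/photo.jpg',
    ]
    for link in filter_image_links(sample_hrefs):
        print(link, '->', file_name_from_url(link))
    print(parse_push_count('爆'), parse_push_count('X3'), parse_push_count('12'))
```

Keeping these rules in small functions makes it easier to notice cases the pattern skips, such as `.png` images or imgur links without a file extension.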